BORT, a Virtual Assistant BOT

“BORT” is an intent-recognition framework for a Virtual Assistant. It combines Google's BERT neural network with a Naive Bayes classifier built on CountVectorizer features to understand the user's intention.

In collaboration with:

Virtual Assistant vs. Chatbot

A chatbot is a simple bot that relies on commands to trigger responses or functions. Our idea was to build a bot that can take part in a fluid conversational chat. The bot only interacts with others when it understands that users need some help or want to hear a joke. There is no need to summon it, because it is always listening and interpreting the conversation. This makes it more than a bot: it is a real virtual assistant that helps you whenever you need it.

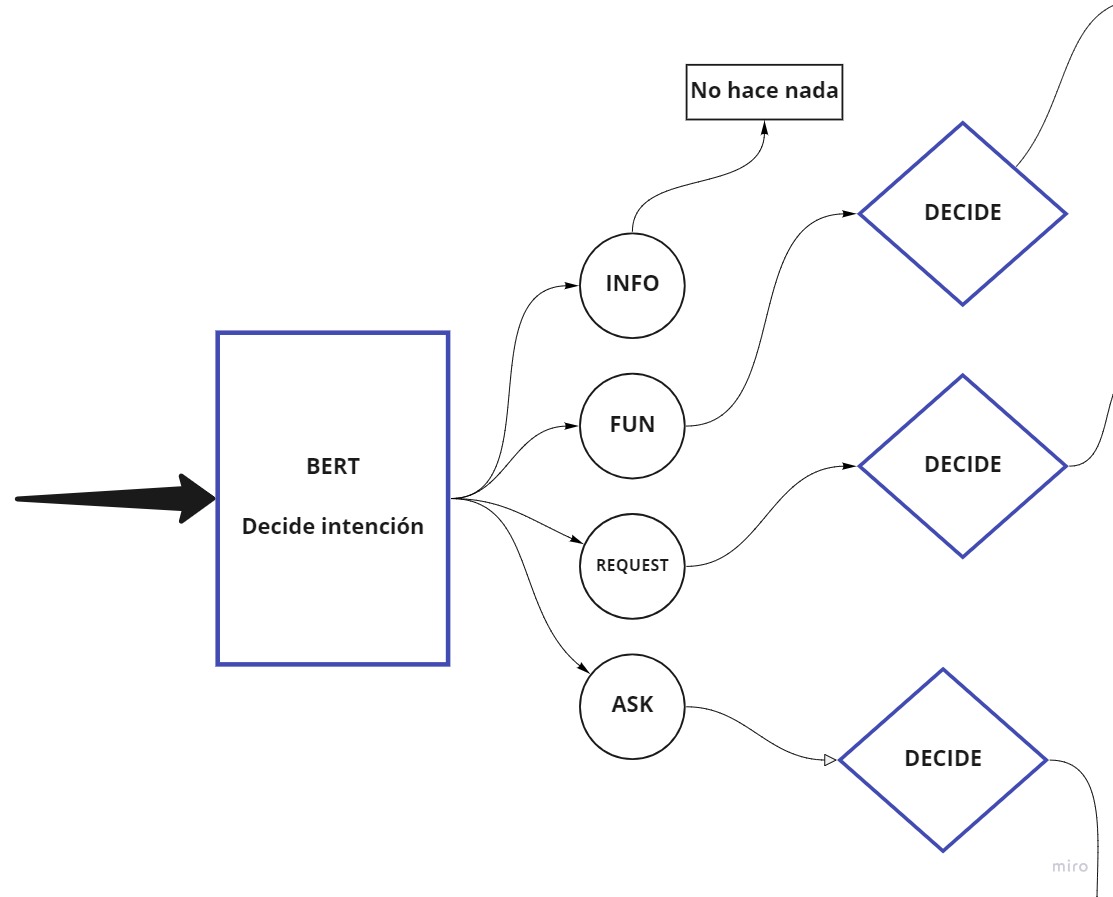

Basic structure

BORT is not meant to be only a chatbot: thanks to its modular structure, it can be useful in many other ways.

When an input is received, it passes through two layers to be understood. The first layer is a multilabel classification with multilingual BERT, trained to recognize the basic intention of the input. Once the basic intention is detected, the input passes to a second classification with CountVectorizer and a Naive Bayes model. This model classifies the semantics of the input among the sections or answers preset in the VA for that intention. The VA then acts according to the identified intention.
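The two-layer flow can be sketched as a simple router. This is a minimal, runnable sketch under stated assumptions, not the project's code: `classify_intention` is a hypothetical keyword stand-in for the fine-tuned multilingual BERT model, and the `SECOND_LAYER` table and action names (`send_code`, `tell_joke`, `stay_silent`, ...) are hypothetical placeholders for the per-intention CountVectorizer + Naive Bayes models. Treating "Informative" inputs as a signal to stay silent is also an assumption, chosen to mirror the "only interacts when users need help" behavior described above.

```python
from typing import Callable, Dict, Tuple

# Layer 1 (hypothetical stand-in): in BORT this is the fine-tuned multilingual
# BERT model; a few keyword rules are used so the sketch runs without a model.
def classify_intention(text: str) -> str:
    t = text.lower().strip()
    if "joke" in t:
        return "Fun"
    if t.endswith("?"):
        return "Questions"
    if t.startswith(("please", "can you", "could you")):
        return "Requests"
    return "Informative"

# Layer 2 (hypothetical): one classifier per intention. In BORT each would be a
# CountVectorizer + Naive Bayes model; the action names are illustrative only.
SECOND_LAYER: Dict[str, Callable[[str], str]] = {
    "Fun": lambda t: "tell_joke",
    "Questions": lambda t: "explain_model" if "model" in t.lower() else "answer_question",
    "Requests": lambda t: "send_code" if "code" in t.lower() else "general_help",
    "Informative": lambda t: "stay_silent",  # assumption: plain statements get no reply
}

def route(text: str) -> Tuple[str, str]:
    """Pass an input through both layers and return (intention, action)."""
    intention = classify_intention(text)
    action = SECOND_LAYER[intention](text)
    return intention, action

print(route("Please send the code for a CNN"))  # ('Requests', 'send_code')
```

Keeping the second layer as a lookup keyed by intention is what makes the structure modular: a new skill only needs a new entry (and a new second-layer model) without retraining the first layer.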

MODELS

Multilingual Classifier BERT

BORT pre-classifies inputs into four intentions: Informative, Requests, Questions, and Fun. Multilingual BERT was pretrained on Wikipedia text in 104 languages. Its pretraining used no labels: it was only trained to predict masked words and whether one sentence follows another. We fine-tuned multilingual BERT on original phrases labeled with these four intentions.
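After fine-tuning, a classification head on top of BERT outputs one score (logit) per intention, and the predicted intention is the one with the highest probability. The sketch below shows only that label mapping and decoding step, in plain Python. The index order of `INTENTIONS`, the `train_examples` phrases, and the assumption that exactly one intention is chosen per input (softmax + argmax) are illustrative, not taken from the project.

```python
import math
from typing import List, Tuple

# The four intentions named in the README; this index order is an assumption.
INTENTIONS = ["Informative", "Requests", "Questions", "Fun"]
LABEL2ID = {label: i for i, label in enumerate(INTENTIONS)}

def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def decode_intention(logits: List[float]) -> Tuple[str, float]:
    """Turn the classifier's four logits into (intention, probability)."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return INTENTIONS[best], probs[best]

# Hypothetical labeled phrases in the (text, label id) shape used for fine-tuning.
train_examples = [
    ("Can you send me the code for a random forest?", LABEL2ID["Requests"]),
    ("What is overfitting?", LABEL2ID["Questions"]),
    ("Tell us a joke, Alfobot", LABEL2ID["Fun"]),
    ("I just pushed the notebook to the repo", LABEL2ID["Informative"]),
]

print(decode_intention([0.1, 2.0, 0.3, -1.0])[0])  # Requests
```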

Naive Bayes and CountVectorizer

BERT is good at finding the basic intention, but CountVectorizer works better for processing the semantics of the phrase. It turns each phrase into a vector of lemma counts, and these frequencies are weighted with TF-IDF. The Naive Bayes model then classifies the phrase into one of the multiple response presets for that intention.
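The second layer can be illustrated with a dependency-free sketch: raw word counts (what scikit-learn's `CountVectorizer` produces) weighted by a smoothed IDF, fed to a multinomial Naive Bayes with additive smoothing. In scikit-learn the equivalent pieces would be `CountVectorizer`, `TfidfTransformer` and `MultinomialNB`; whether the project wires them exactly this way is an assumption. Lemmatization is not reproduced (tokens are just lowercased words), and the preset names `send_code` and `optimize_model` with their training phrases are hypothetical.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List

PUNCT = ".,!?¿¡"

def tokenize(text: str) -> List[str]:
    # Lowercase and split on whitespace; BORT lemmatizes here (not reproduced).
    tokens = (w.strip(PUNCT) for w in text.lower().split())
    return [t for t in tokens if t]

def fit_idf(docs: List[str]) -> Dict[str, float]:
    # Smoothed IDF, the same formula scikit-learn uses by default:
    # idf(t) = ln((1 + n) / (1 + df(t))) + 1
    n = len(docs)
    df: Counter = Counter()
    for doc in docs:
        df.update(set(tokenize(doc)))
    return {t: math.log((1 + n) / (1 + c)) + 1 for t, c in df.items()}

def tfidf(text: str, idf: Dict[str, float]) -> Dict[str, float]:
    # Raw counts weighted by IDF; words never seen in training are dropped.
    counts = Counter(tokenize(text))
    return {t: c * idf[t] for t, c in counts.items() if t in idf}

class MultinomialNaiveBayes:
    def __init__(self, alpha: float = 1.0):
        self.alpha = alpha  # additive (Laplace) smoothing

    def fit(self, vectors: List[Dict[str, float]], labels: List[str]):
        self.classes = sorted(set(labels))
        self.vocab = {t for v in vectors for t in v}
        self.log_prior: Dict[str, float] = {}
        self.log_lik: Dict[str, Dict[str, float]] = {}
        for c in self.classes:
            rows = [v for v, y in zip(vectors, labels) if y == c]
            self.log_prior[c] = math.log(len(rows) / len(labels))
            totals: Dict[str, float] = defaultdict(float)
            for v in rows:
                for t, w in v.items():
                    totals[t] += w
            denom = sum(totals.values()) + self.alpha * len(self.vocab)
            self.log_lik[c] = {t: math.log((totals[t] + self.alpha) / denom) for t in self.vocab}
        return self

    def predict(self, vector: Dict[str, float]) -> str:
        def score(c: str) -> float:
            return self.log_prior[c] + sum(
                w * self.log_lik[c][t] for t, w in vector.items() if t in self.vocab)
        return max(self.classes, key=score)

# Hypothetical presets for the "Requests" intention.
presets = [
    ("send me the code for a neural network", "send_code"),
    ("give me the code of a random forest", "send_code"),
    ("help me optimize my model", "optimize_model"),
    ("help me tune the hyperparameters of my model", "optimize_model"),
]
docs = [text for text, _ in presets]
idf = fit_idf(docs)
model = MultinomialNaiveBayes().fit([tfidf(d, idf) for d in docs], [y for _, y in presets])

print(model.predict(tfidf("Can you send the code for a CNN?", idf)))  # send_code
```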

Splitting the task between two models lets BERT focus on the syntax of the input and Naive Bayes on its semantics. Combined, they predict the user's intention with an accuracy above 90%.
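Because the two models are chained, an input counts as correctly understood only if both layers are right: a wrong intention in the first layer sends the phrase to the wrong set of presets. One way to measure this, assuming a labeled test set (the records below are hypothetical, not project data), is to report the first-layer accuracy next to the end-to-end accuracy of the cascade.

```python
from typing import List, Tuple

# (true_intention, true_action, predicted_intention, predicted_action)
Record = Tuple[str, str, str, str]

def cascade_accuracy(records: List[Record]) -> Tuple[float, float]:
    """Return (layer-1 accuracy, end-to-end accuracy) for the two-model cascade."""
    n = len(records)
    layer1 = sum(ti == pi for ti, _, pi, _ in records) / n
    end_to_end = sum(ti == pi and ta == pa for ti, ta, pi, pa in records) / n
    return layer1, end_to_end

# Hypothetical evaluation records.
records = [
    ("Fun", "tell_joke", "Fun", "tell_joke"),
    ("Requests", "send_code", "Requests", "optimize_model"),  # layer 2 error
    ("Questions", "explain_model", "Fun", "tell_joke"),       # layer 1 error
    ("Requests", "send_code", "Requests", "send_code"),
]
print(cascade_accuracy(records))  # (0.75, 0.5)
```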

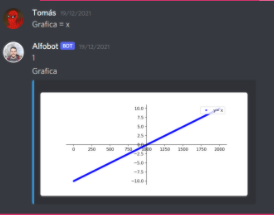

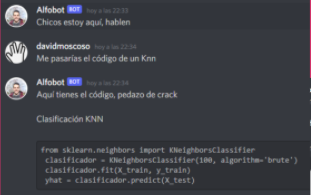

Alfobot, an instance of BORT

We deployed the first instance of BORT as a Discord bot. Alfobot was made in the image and likeness of Alfonso D. Blazquez, a Data Science teacher. Alfobot has 13 functions related to learning Machine Learning and Deep Learning: for example, it can provide code for models or help you optimize your own model. A special feature of Alfobot is its jokes, which are chosen by a recommendation algorithm. Alfobot is now running freely on three Discord servers.

Models of Alfobot

We trained BERT on the full conversation history of a Discord server, and Naive Bayes on the same phrases, each labeled with the specific function associated with it.
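Since both models learn from the same phrases, a single annotated table can feed both layers: BERT sees each phrase with its intention, and Naive Bayes sees it with its function. The sketch below assumes each message is annotated as `(text, intention, function)` and that the Naive Bayes data is grouped per intention, as the two-layer design suggests; the rows and function names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical annotated Discord messages: (text, intention, function).
rows = [
    ("Please send me the code for a CNN", "Requests", "send_code"),
    ("Help me tune my random forest", "Requests", "optimize_model"),
    ("Tell us a joke", "Fun", "tell_joke"),
    ("What is dropout?", "Questions", "explain_concept"),
]

def build_datasets(rows: List[Tuple[str, str, str]]):
    # Layer 1 (BERT): every phrase with its intention label.
    bert_set = [(text, intention) for text, intention, _ in rows]
    # Layer 2 (Naive Bayes): the same phrases labeled with their function,
    # grouped by intention so each intention gets its own classifier.
    nb_sets: Dict[str, List[Tuple[str, str]]] = defaultdict(list)
    for text, intention, function in rows:
        nb_sets[intention].append((text, function))
    return bert_set, dict(nb_sets)

bert_set, nb_sets = build_datasets(rows)
print(nb_sets["Requests"])
```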

The recommendation algorithm extracts the lemmas of the words in each sentence of the input and searches the database of possible answers. It builds a pool of phrases that match 80% of the lemmas. It then selects an answer with a weighted random algorithm, where the weights are calculated from the historical response to each phrase: how much users laughed, the reactions it received, and how often the phrase has been used before.
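A minimal sketch of the recommendation step, assuming details the text leaves open: "matches 80% of the lemmas" is read as "at least 80% of the input's lemmas appear in the stored phrase", and the weight formula `(1 + laughs + reactions) / (1 + times_used)` is a hypothetical choice that rewards laughs and reactions while dampening overused phrases. `lemmatize` is a hypothetical stand-in that only lowercases and strips punctuation; the weighted random draw uses Python's standard `random.choices`.

```python
import random
from dataclasses import dataclass
from typing import List, Optional, Set

@dataclass
class Answer:
    text: str
    lemmas: Set[str]      # precomputed lemmas of the stored phrase
    laughs: int = 0       # historical count of users laughing after it
    reactions: int = 0    # historical count of reactions to it
    times_used: int = 0   # how many times it has been sent before

def lemmatize(sentence: str) -> Set[str]:
    # Hypothetical stand-in: lowercase tokens without punctuation.
    # A real lemmatizer (e.g. spaCy) would go here.
    tokens = {w.strip(".,!?¿¡") for w in sentence.lower().split()}
    tokens.discard("")
    return tokens

def match_ratio(query: Set[str], candidate: Set[str]) -> float:
    # Share of the input's lemmas that also appear in the stored phrase.
    return len(query & candidate) / len(query) if query else 0.0

def weight(a: Answer) -> float:
    # Assumed formula: reward laughs and reactions, dampen overused phrases.
    return (1 + a.laughs + a.reactions) / (1 + a.times_used)

def recommend(sentence: str, answers: List[Answer], threshold: float = 0.8,
              rng: Optional[random.Random] = None) -> Optional[Answer]:
    rng = rng or random.Random()
    query = lemmatize(sentence)
    pool = [a for a in answers if match_ratio(query, a.lemmas) >= threshold]
    if not pool:
        return None
    # Weighted random choice: phrases with a better history are picked more often.
    chosen = rng.choices(pool, weights=[weight(a) for a in pool], k=1)[0]
    chosen.times_used += 1  # update history so its weight drops next time
    return chosen
```

The random draw, rather than always returning the top-weighted phrase, keeps the bot from repeating the same joke while still favoring the ones users liked.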

Project link: https://github.com/evilelmo-corp/alfobot